Franky - Interactive Music Generation

Franky is an AI-driven installation that generates music from the atmosphere of a room. It combines human movement, colour atmosphere, and a unique memory architecture to create soundscapes that evolve with their audience.

Inputs: Movement and Colour

Human Movement

- A video camera captures the people present.

- Pose and gait recognition models extract skeletal keypoints.

- These poses are stored in memory, filtered, and translated into MIDI events - notes, rhythms, and dynamic changes.

- Stillness, walking, groups, or sudden gestures each produce different sonic textures.
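This is a minimal sketch of how per-frame keypoints could be mapped to the movement categories above. It makes three assumptions:

- Keypoints arrive as normalised (x, y) pairs in the same joint order every frame.
- The thresholds `still_max` and `walk_max` are hypothetical placeholders.
- Only one person is handled. Detecting groups would need per-person tracks, which this sketch leaves out.

```python
import math

# Assumed input: one (x, y) pair per joint, normalised to [0, 1],
# with the same joint order in every frame.
Keypoints = list[tuple[float, float]]


def mean_speed(prev: Keypoints, curr: Keypoints, dt: float) -> float:
    """Average keypoint displacement per second between two frames."""
    dists = [math.dist(p, c) for p, c in zip(prev, curr)]
    if not dists:
        return 0.0
    return sum(dists) / len(dists) / dt


def classify_motion(speed: float, still_max: float = 0.02, walk_max: float = 0.3) -> str:
    """Map a speed to a coarse movement label (hypothetical thresholds)."""
    if speed < still_max:
        return "stillness"
    if speed < walk_max:
        return "walking"
    return "sudden gesture"
```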

Colour Atmosphere

- The camera frame is divided into four sectors (quadrants).

- In each sector, Franky samples a grid: the center point plus eight surrounding points.

- It computes the average colour for that sector.

- Over time, Franky tracks both the dominant colour and how it changes and moves.

- Colours contribute to the room mood:

  - Warm hues → higher energy

  - Cool hues → calmness

  - Fast shifts → agitation
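The sampling scheme above can be sketched as follows, assuming the frame is a row-major list of 8-bit RGB tuples. Two details are assumptions:

- The 3x3 grid sits at 1/4, 1/2 and 3/4 of each sector's width and height.
- The `warmth` cue scales a hue cosine by saturation, so greys read as neutral rather than warm.

```python
import colorsys
import math

RGB = tuple[int, int, int]
Frame = list[list[RGB]]  # frame[y][x], 8-bit RGB


def sector_average(frame: Frame, x0: int, y0: int, w: int, h: int) -> RGB:
    """Average colour of a 3x3 grid: the sector centre plus its eight neighbours."""
    # Assumed grid placement: 1/4, 1/2 and 3/4 of the sector's width and height.
    samples = [
        frame[y0 + h * fy // 4][x0 + w * fx // 4]
        for fy in (1, 2, 3)
        for fx in (1, 2, 3)
    ]
    r, g, b = (sum(px[i] for px in samples) // len(samples) for i in range(3))
    return (r, g, b)


def quadrant_colours(frame: Frame) -> list[RGB]:
    """Average colour per quadrant: top-left, top-right, bottom-left, bottom-right."""
    h, w = len(frame), len(frame[0])
    hw, hh = w // 2, h // 2
    return [sector_average(frame, x0, y0, hw, hh) for y0 in (0, hh) for x0 in (0, hw)]


def warmth(rgb: RGB) -> float:
    """Hypothetical mood cue in [-1, 1]: +1 saturated red, -1 saturated cyan, 0 grey."""
    hue, sat, _ = colorsys.rgb_to_hsv(*(c / 255 for c in rgb))
    return sat * math.cos(2 * math.pi * hue)
```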

Memory Architecture

Franky doesn't just react in the moment - it remembers. Its layered memory system is inspired by human psychology, distinguishing between fleeting gestures and meaningful Episodes.

What is an Episode?

- An Episode is a bounded interval of time where movement or colour patterns deviate strongly from the baseline.

- Episodes are built from short-term windows of features such as speed variance, tension, synchrony, and expansion.

- Each Episode is stored with a summary vector: feature stats, colour state, context (time, crowd size), and an embedding for similarity search.

- Episodes can be compared (e.g. via k-NN) and clustered, giving Franky a sense of what usually happens versus what stands out.
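One simple way to find bounded intervals that deviate from the baseline is a rolling z-score on a single feature, for example speed variance. This is a sketch under assumptions:

- The window length, threshold and minimum length are hypothetical.
- The baseline is frozen while an Episode is open, so the Episode does not pull the baseline towards itself.
- The Episodes described above combine several features. This sketch handles only one.

```python
from statistics import mean, pstdev


def detect_episodes(
    signal: list[float],
    baseline_len: int = 50,   # hypothetical rolling-baseline length (samples)
    z_thresh: float = 3.0,    # hypothetical deviation threshold
    min_len: int = 3,         # hypothetical minimum Episode length (samples)
) -> list[tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, where the signal deviates."""
    episodes = []
    start = None  # index where the currently open Episode began, if any
    for t in range(baseline_len, len(signal)):
        # Freeze the baseline at the Episode start while an Episode is open.
        ref_end = t if start is None else start
        window = signal[ref_end - baseline_len:ref_end]
        mu = mean(window)
        sigma = pstdev(window) or 1e-9  # avoid dividing by zero on a flat baseline
        deviant = abs(signal[t] - mu) / sigma > z_thresh
        if deviant and start is None:
            start = t
        elif not deviant and start is not None:
            if t - start >= min_len:
                episodes.append((start, t))
            start = None
    if start is not None and len(signal) - start >= min_len:
        episodes.append((start, len(signal)))
    return episodes
```

Each detected interval could then be summarised into the vector described above and compared against stored Episodes.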

Memory Layers

- Short-Term Memory (seconds) → raw pose data, colours, quick gestures, continuously overwritten.

- Session Memory (~30 min) → rolling summaries, used to judge whether a new Episode is significant.

- Long-Term Memory (hours to indefinitely) → rare, unusual Episodes preserved, forming Franky's evolving identity.

- Blended Atmosphere Model → C(t) = α · short-term state + β · session deviation + γ · episode memory

This ensures Franky is:

- Reactive → responds instantly.

- Context-aware → compares with the session baseline.

- Identity-driven → adapts uniquely over time.
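The blended model C(t) is a weighted sum applied per feature. This sketch assumes each memory layer exposes named features (e.g. intensity, tension) and that a feature missing from a layer contributes 0. The default weights are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlendWeights:
    alpha: float = 0.6  # hypothetical weight for the short-term state
    beta: float = 0.3   # hypothetical weight for the session deviation
    gamma: float = 0.1  # hypothetical weight for the episode memory


def blend(
    short_term: dict[str, float],
    session_dev: dict[str, float],
    episode_mem: dict[str, float],
    w: BlendWeights = BlendWeights(),
) -> dict[str, float]:
    """C(t) = alpha * short-term + beta * session deviation + gamma * episode memory."""
    features = short_term.keys() | session_dev.keys() | episode_mem.keys()
    return {
        k: w.alpha * short_term.get(k, 0.0)
        + w.beta * session_dev.get(k, 0.0)
        + w.gamma * episode_mem.get(k, 0.0)
        for k in features
    }
```

Raising α makes Franky more reactive. Raising γ lets its long-term identity dominate.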

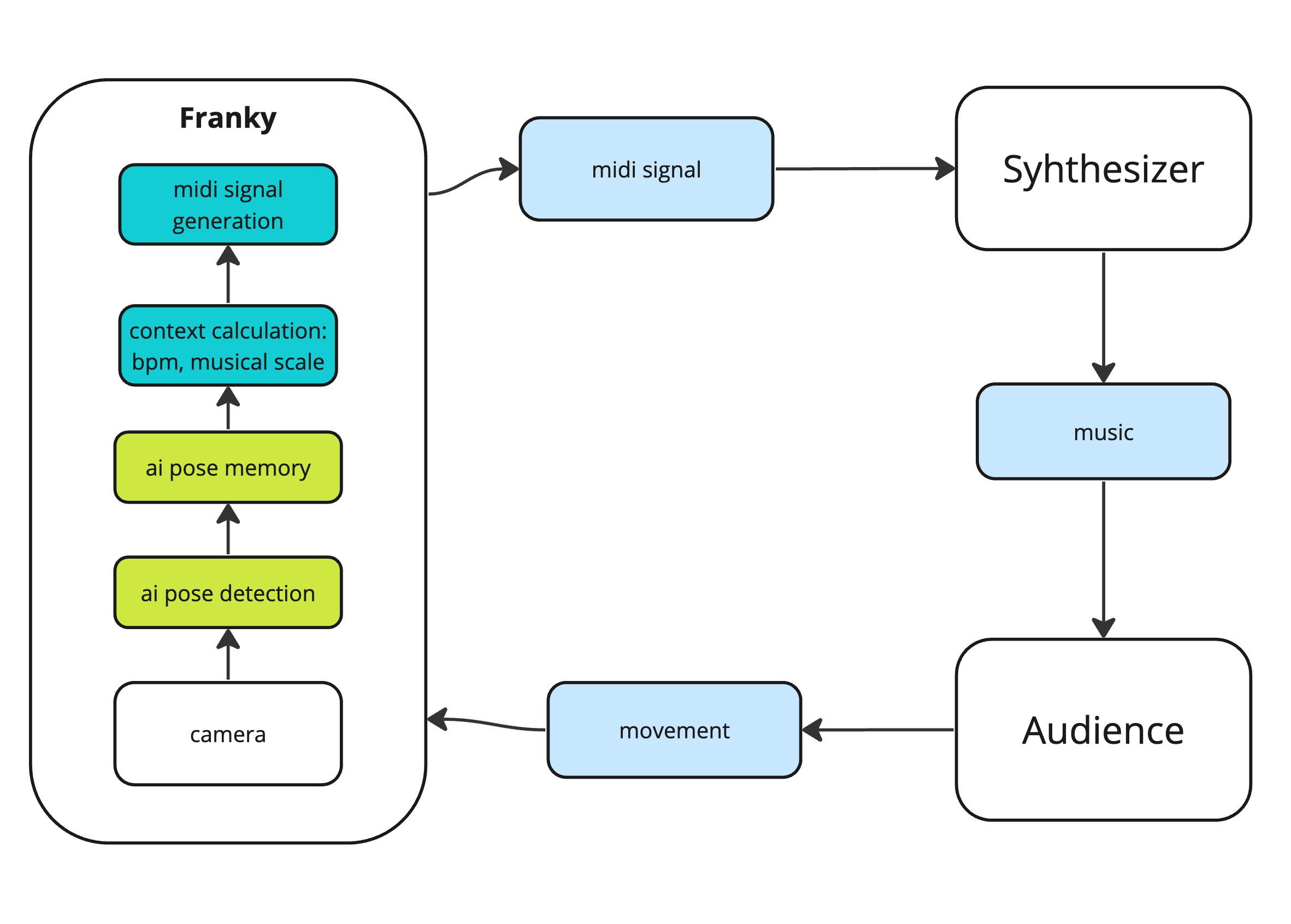

Technical Architecture

Franky is a distributed system with multiple specialized components that work together in real time.

Core Components

- Pose Recognition (Python) → extracts skeletal keypoints.

- MIDI Bridge (Python) → converts control signals into MIDI events.

- Atmosphere Recognition (Rust) → processes colour/movement features.

- Memory & Event Engine (Rust) → implements memory layers & Episode detection.

- Database (Identity) → stores atmosphere snapshots, event histories, long-term memories.

- UI Layer (Electron + React + TypeScript) → controls, monitoring, real-time feedback.

System Design

- A message broker coordinates communication between components.

- Each module runs as an independent service but shares a common event bus.

- Real-time updates ensure that changes instantly influence the sound.

- The database ensures continuity and evolving identity.
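The broker pattern can be illustrated with an in-process publish/subscribe bus. This is a stand-in, not the actual broker: the progress log mentions MQTT, which would play this role across processes. Topic names such as `control/signals` are hypothetical.

```python
from typing import Any, Callable

Handler = Callable[[str, dict[str, Any]], None]


class EventBus:
    """In-process stand-in for the message broker: topic-based publish/subscribe."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, payload: dict[str, Any]) -> None:
        # Deliver to every subscriber of this exact topic, in subscription order.
        for handler in self._subscribers.get(topic, []):
            handler(topic, payload)
```

If both the MIDI bridge and the audio renderer subscribe to the same control topic, they receive identical updates.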

Deployment Goal

- Web application: runs in the browser with webcam input, outputs an adaptive audio stream, and scales across users and venues.

How Franky Generates Music

Franky creates music by combining AI-driven pattern generation, scene logic, and two complementary playback systems: MIDI and FMOD.

Why MIDI?

- MIDI carries musical instructions, not audio. Think of MIDI like a musical score: it says which note to play, for how long, and how loud, but not how it sounds. A single MIDI file can be played by a piano, a synth, or even a drum machine, each giving it a different character.

- Advantages of MIDI over direct sound generation:

  - Flexibility: One set of patterns can drive many instruments (hardware synths, VSTs, or live bands).

  - Efficiency: MIDI is lightweight (kilobytes), so AI can generate patterns quickly, bar by bar.

  - Editability: Musicians can tweak Franky's output in their DAW, revoice it, or layer it with their own playing.

  - Longevity: MIDI is a standard dating from 1983. It works everywhere, from club gear to modern laptops.

By starting with symbolic music instead of raw sound, Franky stays versatile: the same movement in the room could trigger a soft piano in one setup, or an aggressive techno synth in another.
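A MIDI 1.0 Note On message makes "instructions, not audio" concrete. It is three bytes long:

- a status byte, `0x90` plus the channel number 0-15;
- the note number, 0-127;
- the velocity, 0-127.

The helpers below only build the bytes. Sending them to a port would go through a MIDI library such as mido, which is not shown here.

```python
def _check(name: str, value: int, hi: int) -> None:
    """Reject values outside the 0..hi range that MIDI 1.0 allows."""
    if not 0 <= value <= hi:
        raise ValueError(f"{name} must be in 0..{hi}, got {value}")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """MIDI 1.0 Note On: status 0x90 | channel, then note and velocity."""
    _check("channel", channel, 15)
    _check("note", note, 127)
    _check("velocity", velocity, 127)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """MIDI 1.0 Note Off: status 0x80 | channel, then note and release velocity."""
    _check("channel", channel, 15)
    _check("note", note, 127)
    _check("velocity", velocity, 127)
    return bytes([0x80 | channel, note, velocity])
```

Middle C at velocity 100 on the first channel is `note_on(0, 60, 100)`. The message says nothing about which instrument plays it.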

What is FMOD?

- FMOD is a professional audio engine used in games, VR, and interactive installations.

- Instead of producing raw sound from scratch, it manages audio stems (pre-recorded instrument layers, textures, effects).

- Franky tells FMOD when to switch scenes, fade layers, or adjust filters - like a live producer sitting at the mixing desk.

Why FMOD matters for Franky:

- Production Quality: FMOD ensures Franky doesn't just "make notes"; it sounds polished and mix-ready.

- Real-time Control: Parameters like intensity, tension, brightness, and space can shape the sound instantly.

- Scene Transitions: FMOD handles smooth bar-synced changes (Calm → Build → High → Release), avoiding abrupt cuts.

- Hybrid Approach: While MIDI drives symbolic instruments, FMOD ensures the installation always sounds full and professional - even without external gear.
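A bar-synced transition comes down to waiting for the next bar line. FMOD Studio may handle quantisation on its own side, so this arithmetic matters mainly for keeping the MIDI output aligned with it. This sketch assumes a constant tempo and a known start time. The function and parameter names are illustrative.

```python
import math


def next_bar_time(now_s: float, bpm: float, beats_per_bar: int = 4, start_s: float = 0.0) -> float:
    """Time (s) of the next bar line at or after now_s, assuming a constant tempo."""
    bar_len = beats_per_bar * 60.0 / bpm  # seconds per bar
    bars_elapsed = (now_s - start_s) / bar_len
    return start_s + math.ceil(bars_elapsed) * bar_len
```

At 120 BPM in 4/4, a bar lasts 2 s. A scene change requested at 3.1 s would therefore be scheduled for 4.0 s.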

Together: MIDI + FMOD

- MIDI = the score and improvisation system (flexible, editable, scalable).

- FMOD = the polished audio renderer (ready-to-play, adaptive, immersive).

- Running side by side means Franky can:

  - Drive up to 16 external instruments via MIDI.

  - Render a professional soundtrack via FMOD.

  - Keep both in sync with the same control signals.

The result: a living soundtrack that adapts to the audience, flexible for musicians and impressive for non-technical listeners.

1. Control Signals

- Derived from pose, colour, memory.

- Continuous parameters: intensity, tension, brightness, density, space.

- Discrete scenes: Calm, Build, High, Release.
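One possible shape for turning the continuous signals into discrete scenes is a small state machine. It follows the Calm → Build → High → Release order and is driven by the intensity signal. The thresholds `low` and `high` and the exact transition rules are hypothetical.

```python
from enum import Enum


class Scene(Enum):
    CALM = "Calm"
    BUILD = "Build"
    HIGH = "High"
    RELEASE = "Release"


def next_scene(current: Scene, intensity: float, low: float = 0.3, high: float = 0.7) -> Scene:
    """Advance the scene from a 0..1 intensity value (hypothetical thresholds and rules)."""
    if current is Scene.CALM:
        return Scene.BUILD if intensity > low else Scene.CALM
    if current is Scene.BUILD:
        if intensity > high:
            return Scene.HIGH
        return Scene.CALM if intensity < low else Scene.BUILD
    if current is Scene.HIGH:
        return Scene.RELEASE if intensity < high else Scene.HIGH
    # Release: settle back to Calm, or start building again if energy returns.
    if intensity < low:
        return Scene.CALM
    return Scene.BUILD if intensity > high else Scene.RELEASE
```

Because High can only be left through Release, the form always passes through a wind-down, even when energy drops suddenly.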

2. Symbolic Music (MIDI)

- AI models generate bar-by-bar patterns.

- Up to 16 MIDI channels → drums, bass, pads, leads, FX, arps, textures.

- Patterns grouped into playlists/scenes mirroring FMOD.
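The AI models are not described here, but a deterministic stand-in shows how a control signal can shape one bar. The sketch maps `density` to a Euclidean rhythm, which spreads N onsets as evenly as possible over the bar. This is a swapped-in technique, not Franky's generator. The 16-step grid is an assumption. In General MIDI, such a drum part would conventionally go out on channel 10.

```python
def euclidean_pattern(hits: int, steps: int = 16) -> list[bool]:
    """Spread `hits` onsets as evenly as possible over `steps` (Euclidean rhythm, up to rotation)."""
    if not 0 <= hits <= steps:
        raise ValueError("hits must be between 0 and steps")
    # Step i is an onset when (i * hits) mod steps < hits; this yields exactly `hits` onsets.
    return [(i * hits) % steps < hits for i in range(steps)]


def drum_bar(density: float, steps: int = 16) -> list[bool]:
    """Map a 0..1 density control signal to a one-bar onset pattern (assumed 16 steps)."""
    clamped = max(0.0, min(1.0, density))
    return euclidean_pattern(round(clamped * steps), steps)
```

For example, `euclidean_pattern(3, 8)` gives the tresillo x..x..x., and a density of 0.25 gives four-on-the-floor.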

3. Layered Audio (FMOD)

- FMOD renders stems (pad, bass, percussion, textures).

- Parameters control layering, filters, reverb, brightness.

- Scenes organize horizontal form (Calm → Build → High → Release).
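Vertical layering can be pictured as each stem fading in over its own slice of the intensity range. The stem ranges below are hypothetical. In FMOD Studio this would normally be authored as parameter automation rather than computed in code. The sketch only shows the shape of the mapping.

```python
# Hypothetical fade-in ranges (start, full) on a 0..1 intensity axis for each stem.
STEM_RANGES: dict[str, tuple[float, float]] = {
    "pad": (0.0, 0.1),
    "textures": (0.1, 0.4),
    "bass": (0.3, 0.6),
    "percussion": (0.5, 0.8),
}


def stem_gains(intensity: float) -> dict[str, float]:
    """Linear gain 0..1 per stem: silent below its range, full above it, ramp inside."""
    gains = {}
    for stem, (lo, hi) in STEM_RANGES.items():
        gains[stem] = min(1.0, max(0.0, (intensity - lo) / (hi - lo)))
    return gains
```

At an intensity of 0.45, the pad and textures play fully, the bass is about half-way in, and the percussion is still silent.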

4. MIDI + FMOD Together

- MIDI = symbolic patterns for DAWs & hardware.

- FMOD = polished adaptive stems.

- Both share control signals → always in sync.

5. Result

Franky is a conductor system:

- Drives up to 16 instruments via MIDI.

- Plays adaptive soundscapes via FMOD.

- Keeps both synchronized.

Competitors & Related Projects

Franky is a webcam-driven interactive music generator using pose estimation, novelty detection, memory tiers, and MIDI mapping.

Here are existing projects and concepts in the same creative space:

Direct Webcam-to-Music Interfaces

Parab0xx (AlgoMantra Labs)

- What: Webcam + projector interface where light sources (candles/phones) trigger tabla samples when virtual objects overlap.

- How it's similar: Uses a webcam to drive sound, though it works more like sample triggering than generation.

- Ref: Wired article

NeoLightning

- What: An academic reimagining of the Buchla Lightning using MediaPipe + Max/MSP. Gestures control sound in real time.

- How it's similar: Webcam gestures → expressive sound mapping.

- Ref: arXiv preprint

Flurry (Gallery Installation)

- What: Interactive installation interpreting gestures into generative soundscapes.

- How it's similar: Webcam → sound, more artistic/ambient focus.

- Ref: E-garde project

Merton (Chatroulette Piano Guy)

- What: Human improviser responding musically to webcam strangers.

- How it's similar: Webcam-as-trigger for musical interaction.

- Ref: Wired coverage

Webcam Interactive Music Videos

Azealia Banks — Wallace

- What: Music video where viewer’s webcam feed is embedded in real time.

- How it's similar: Viewer becomes part of the audiovisual experience.

- Ref: Pitchfork news

Browser / Online Experiments

BlokDust

- What: Browser-based generative synth playground (drag-and-drop nodes).

- How it's similar: Interactive web music, sometimes webcam/visual input.

- Ref: Google Experiments

Why Franky is Different

- Uses pose estimation + novelty detection instead of direct gesture mapping.

- Keeps a memory hierarchy (short-term, session, long-term) so it evolves over time.

- Outputs structured MIDI for external synths/DAWs, not just audio samples.

- Designed for scene logic (Calm / Flow / Hype) with adaptive thresholds (“personality drift”).

Monetization Plan & Strategy

Phase 1 - Artist Collaborations & Showcases

- Collaborations with DJs, producers, and performance artists to build credibility and visibility.

- Presentations & live installations in galleries, clubs, and festivals.

- Revenue: sponsorships, performance fees, co-branded packs.

- In parallel, develop a minimal web app (camera in → audio out) to demonstrate Franky as a service.

Phase 2 - Web App Release

- Refine the web app into a stable product with:

  - Subscriptions (cloud model updates, new packs).

  - Style packs (techno, house, ambient, hip-hop, etc.).

- Start onboarding early adopters (artists, small communities).

Progress Log

August 23, 2025

- Set up pose tracking on the Pi from the mirror repository

- Play around with FMOD

August 24

- Port pose metrics from the abandoned kmm repo to Rust (partial)

- Decide to include illumination tracking

- Define a roadmap

August 25

- Define Hungarian pose tracking

- Port derivative metrics from the kmm repo

- Define per-frame derivatives (not finished yet)

August 26-28

- Define all the derivatives

- Remove the ones that don't belong :)

- Add the runtime: take a pose, compute metrics, store, filter, smooth

- Cover with tests

- Extract tracking hyperparameters into config.yaml (in progress)

August 29

- Clean up tracking, cover with tests

- Add an aggregate task named sliding

- Add sliding parameters (not finished)

- Idea: log sliding output to the database and to MQTT (todo)

- Todo: aggregation config